The advent of artificial intelligence (AI) tools has transformed the way students interact with knowledge, said Professor Bertha Alicia Rosales at the 2025 National Faculty Summit (RNP for its initials in Spanish).

However, it has also brought new challenges for teachers, who face the dilemma of ensuring a responsible and educational use of these technologies in their classrooms.

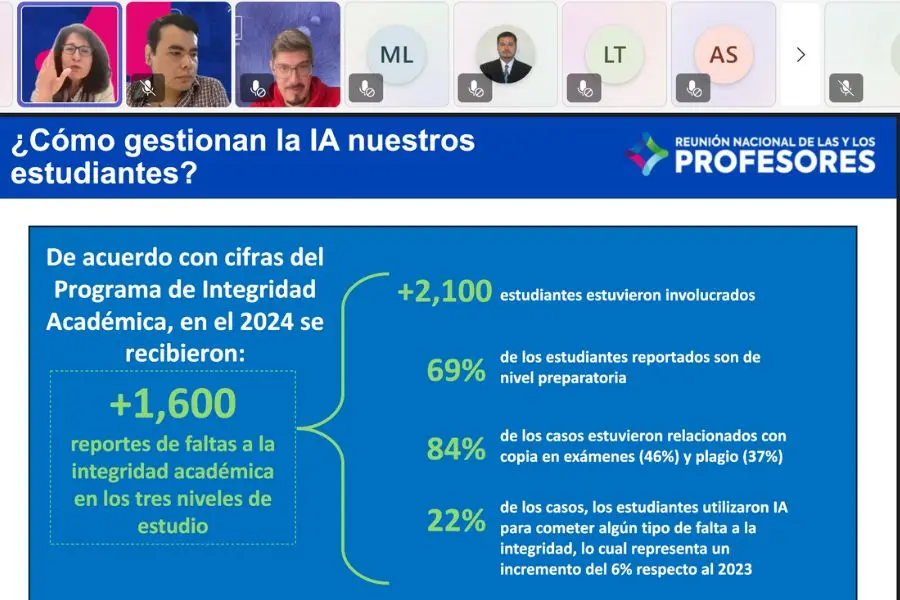

“According to figures from the (Tec’s) Academic Integrity Program, there were more than 1,600 reports of academic integrity violations at the three levels of education in 2024.

“This indicates to us that students aren’t self-managing properly, as they are using these technologies such as artificial intelligence to obtain an academic advantage through plagiarism (...) which compromises our teaching work,” she said.

With that in mind, the ambassador of the Program for Strengthening Academic Integrity shared some key recommendations for incorporating AI in the classroom without losing sight of ethical values and meaningful learning.

12 tips for ethically using AI in the classroom

1. Be clear and explicit with your students from the start

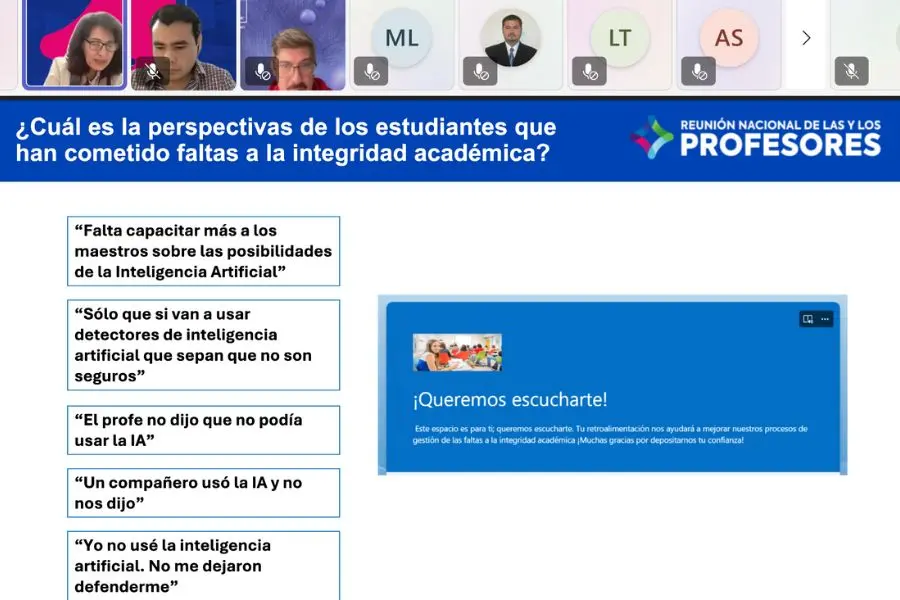

One of the main conflicts detected by Rosales is a lack of communication between teachers and students regarding the permitted use of AI, as some students don’t know if they can use it or don’t ask.

“The teacher didn’t say I couldn’t use AI,” was one of the recurring complaints from students who had been punished.

“Students have to manage themselves and need to know that even if the teacher doesn’t say anything, they have to go and ask if they can use it.”

The solution, according to the teacher, is to include a section in course planning that states whether the use of AI tools is allowed and under what conditions.

“Just explain it; they’ll understand. That way, you can present yourself as responsible for the decisions made in the classroom,” she said.

2. Train yourself before integrating it into the class

AI is not a fad but a powerful tool. For Rosales, the first step is to overcome the fear or automatic rejection that teachers may experience.

“At one time, I was in a moral panic. I was a writing teacher and felt violated. However, now it’s an ally for me. I use it quite often and with confidence,” she said.

Knowing how platforms such as ChatGPT work, what biases they may have, and how a model is trained is part of a teacher’s duty because only then will it be possible to make informed decisions about their educational application, she said.

“It’s also important to take the time to talk to students about their perception of the use of these technologies, their usefulness, whether or not they see them as a fad,” she added.

3. Evaluate AI tools critically

Not all AI tools are reliable, she warned, explaining that they can often have serious flaws such as making up quotes, delivering erroneous data, and replicating biases without users realizing it.

“You’re responsible for the effect AI has on the teaching-learning process. The veracity and quality of the materials generated need to be ensured.

“Informed use of the tools will allow you to know their functionalities and limitations,” she stressed.

The ambassador also invites students to review and validate the information provided by AI, noting that it is not enough to copy and paste; they must learn to question and cross-check the information.

4. Reinforce digital literacy in your group

Although many teachers may think that young people were born “digitized,” the truth is that they are just learning to manage these new tools and therefore need guidance, said the speaker.

“Our students are in an immersion process that’s just beginning. It’s a good time to commit ourselves to teaching them responsibility,” stressed Rosales.

Ethical digital literacy is not the sole responsibility of parents or laws, the professor explained, so teachers should remember that their own formative role is key to this mentoring process.

5. Talk about ethics and values in class

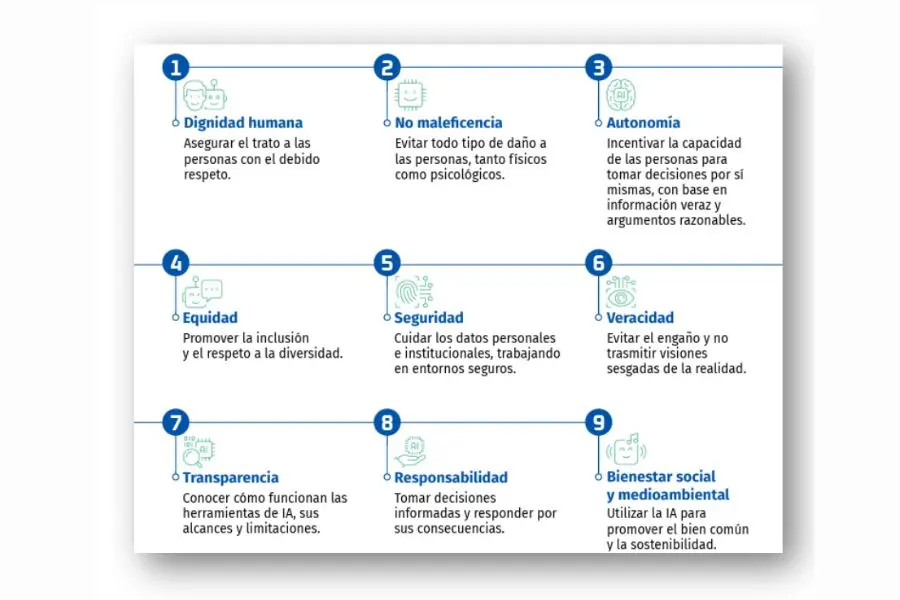

How do we train competent professionals who do not use technology to gain an unfair advantage or commit misconduct? The way forward, according to Rosales, is to return to fundamental values.

“If their technical knowledge isn’t accompanied by moral virtues, students put the common good at risk,” she warned.

Thus, the ambassador pointed out the importance of inviting students to reflect on principles such as autonomy, truthfulness, and responsibility as values that should guide their decision-making on the use of AI.

6. Promote intrinsic motivation

We cannot police what every student does, nor can we rely solely on punishment as a method of control. The real goal, Rosales explains, is for students to develop a moral compass of their own.

“When students act only for reward or punishment, then they’ll do what they want when no one is looking,” she said. “We need the motivation to be guided by values to come from within.”

Creating spaces where students value learning for its own sake (and not just for a grade) can make a big difference in ethical AI management.

7. Share whether you use AI and why

More and more teachers are using AI to generate ideas, write feedback, and design lessons. Therefore, she recommends that if you use it, be transparent, inform your students, and justify how and why you do so.

“If you’ve used it, you have to justify why you used it and ensure that there was objectivity and fairness in grading,” she said.

This not only builds trust but also teaches by example how to use these tools in an ethical and conscientious manner.

8. Design meaningful tasks and assessments

AI makes it easier to cheat, Rosales acknowledges, but it can also enrich learning if projects are well designed.

The key is to build activities that require critical thinking, creativity, and follow-through.

“Cheating is getting cheaper, easier, and harder to detect. Therefore, any activity without monitoring is doomed to have pitfalls,” she warned.

Opt for projects in stages, with constant feedback and spaces for reflection. This way, you reduce the risk of misuse and encourage commitment, said the expert.

9. Open spaces for dialogue with your group

Many students make mistakes because they lack information or do not feel listened to, which can end with them being punished for using AI tools even when they did not necessarily have malicious intentions.

Rosales proposed generating spaces for conversation with students about their experiences with AI, as these spaces can help prevent conflicts and strengthen teacher support.

“Ask them if these technologies work for them, if they see them as a fad, or if they use them in a meaningful way. Continue asking those questions in front of them,” she said.

The teacher also explained that in cases of dishonest use of AI, it is advisable to talk to students beforehand so that they understand its different uses beyond just the repercussions of the offense.

10. Inform students about the consequences of misuse

It is not enough to have rules if they are not communicated. According to figures shared by Rosales, 22% of academic misconduct cases at the Tec in 2024 involved AI as a means of copying or plagiarizing.

“The misuse of AI isn’t itself an offense, but it is when an offense is committed using it. And that has consequences,” she explained.

Remember to make clear to the class that using AI to gain a dishonest academic advantage may lead to penalties, such as failing grades or reports for lack of integrity, applied on a case-by-case basis.

11. Be aware of the use of personal data

Because AI feeds on information, Rosales mentions that teachers should be aware of the algorithms, interests, and large volumes of data behind these tools.

“Our data is a treasure for many companies. We all leave a digital footprint,” she warned.

Find out about the privacy policies of the tools you use and teach your students to learn about and critically analyze them as well.

Remember also that platforms such as EduTools can help you find and recommend AI tools that have been tested and rated as safe by the institution.

12. Follow the institutional guidelines and help them to evolve

In addition to classroom regulations, the speaker pointed out that the Tec has guidelines on the ethical use of AI, stressing that it is not enough to read the document; it must be put into practice.

“Culture does not come about through decree. We have to follow the guidelines, appropriate their values, and help them to evolve in line with the technology,” Rosales concluded.

Finally, Rosales said that ethics is not static; it is built every day, and she encouraged teachers to continue exploring and to foster open dialogue about the implications of these tools in the classroom.

“Our commitment to education forces us to rethink not only our pedagogies, teaching methods, and assessment models, but the content and competencies we hope to develop in our students.

“In some cases, we need to rethink the purposes of the educational process,” she pointed out.

2025 National Faculty Summit

The National Faculty Summit is the annual meeting of Tec de Monterrey’s Higher Education academic community, offering opportunities for learning, institutional alignment, roundtable discussions, and moments of celebration.

This year, it was held in a hybrid format, with two days of online conferences and workshops and two days in person on the Monterrey campus, under the theme “From our Faculty: Building Bridges to the Future.”

“It’s a year in which we’ve promoted key projects on our path to 2030 and our shared goal of attracting the most talented people to come collaborate and study with us.

“Imagine and build a better world from within the Tec,” said Roberto Íñiguez Flores, the Tec’s Executive Vice Rector for Academic Affairs and Faculty.

From July 1 to 4, faculty members participated in the exchange of best practices and activities to strengthen faculty development and wellbeing.
